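How to use Jupyter Notebook

File extension: .ipynb

Select a kernel -> choose the virtual environment you created earlier

There is no need to keep calling print: a cell automatically displays the value of its last expression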

========================================================================================

1. Using LangChain

Calling an LLM model (text-davinci)

Calling a chat model (gpt-3.5-turbo) <- about 1/10 the price of text-davinci, optimized for chat, and the more recent model

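from langchain.llms.openai import OpenAI
from langchain.chat_models import ChatOpenAI, ChatAnthropic

llm = OpenAI()       # completion-style LLM
chat = ChatOpenAI()  # chat model

# Ask both models the same question
a = llm.predict("How many planets are there?")
b = chat.predict("How many planets are there?")

# Last expression in the cell, so Jupyter displays both answers
a, b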

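By default, OpenAI and ChatOpenAI read the API key from the OPENAI_API_KEY environment variable.

If the key is stored under a different variable name, the following error can occur: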

ValidationError: 1 validation error for OpenAI __root__ Did not find openai_api_key, please add an environment variable `OPENAI_API_KEY` which contains it, or pass `openai_api_key` as a named parameter. (type=value_error)
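One way to avoid this error when the key lives under another name is to copy it to OPENAI_API_KEY before creating the model. The snippet below is a minimal sketch using only the standard library; the helper name `ensure_openai_key` and the variable name `MY_OPENAI_KEY` are made up for illustration.

```python
import os


def ensure_openai_key(custom_name, env=None):
    """Make sure OPENAI_API_KEY is set, copying it from custom_name if needed.

    custom_name is a hypothetical variable name; env defaults to os.environ.
    """
    env = os.environ if env is None else env
    if "OPENAI_API_KEY" not in env:
        if custom_name not in env:
            raise KeyError(f"Neither OPENAI_API_KEY nor {custom_name} is set")
        env["OPENAI_API_KEY"] = env[custom_name]
    return env["OPENAI_API_KEY"]


# Demo with a plain dict so the example has no side effects;
# in a notebook you would call ensure_openai_key("MY_OPENAI_KEY") instead.
demo_env = {"MY_OPENAI_KEY": "sk-placeholder"}
key = ensure_openai_key("MY_OPENAI_KEY", env=demo_env)
```

As the error message itself says, the other option is to pass the key directly as the `openai_api_key` named parameter when constructing OpenAI or ChatOpenAI.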

========================================================================================

2. Using the gpt-3.5-turbo model

From here on the gpt-3.5-turbo model is used. Run the code below in a Jupyter notebook.

from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI(
    temperature=0.1
)

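SystemMessage assigns a role to the model

AIMessage is a message from the AI (gpt)

HumanMessage is the user's question

Running the code below returns the next reply, as in a conversation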

from langchain.schema import HumanMessage, AIMessage, SystemMessage

messages = [
    SystemMessage(content="You are a geography expert. And you only reply in Italian."),
    AIMessage(content="Ciao, mi chiamo Paolo!"),
    HumanMessage(content="What is the distance between Mexico and Thailand. Also, what is your name?"),
]

chat.predict_messages(messages)

========================================================================================

3. Prompt template

Create a template, format it, then pass the result to the predict_messages method

from langchain.chat_models import ChatOpenAI
from langchain.prompts import PromptTemplate, ChatPromptTemplate

chat = ChatOpenAI(
    temperature=0.1
)

# Build the template
template = ChatPromptTemplate.from_messages([
    ("system", "You are a geography expert. And you only reply in {language}."),
    ("ai", "Ciao, mi chiamo {name}!"),
    ("human", "What is the distance between {country_a} and {country_b}. Also, what is your name?"),
])

# Fill in the placeholders
prompt = template.format_messages(
    language="Greek",
    name="Socrates",
    country_a="Mexico",
    country_b="Thailand",
)

# Send the formatted messages to the model
chat.predict_messages(prompt)
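To see what the formatting step does conceptually, here is a rough stand-in written with plain Python string formatting. It is not LangChain's implementation; the function name `fill_messages` is hypothetical and it only mimics the placeholder substitution on (role, text) pairs.

```python
def fill_messages(template, **values):
    """Replace {placeholders} in every (role, text) pair using str.format."""
    return [(role, text.format(**values)) for role, text in template]


# Same messages as in the template above, as plain tuples
template = [
    ("system", "You are a geography expert. And you only reply in {language}."),
    ("ai", "Ciao, mi chiamo {name}!"),
    ("human", "What is the distance between {country_a} and {country_b}. Also, what is your name?"),
]

prompt = fill_messages(
    template,
    language="Greek",
    name="Socrates",
    country_a="Mexico",
    country_b="Thailand",
)

for role, text in prompt:
    print(f"{role}: {text}")
```

The point is that the template is written once and reused with different values, instead of rewriting every message by hand.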

========================================================================================

4. LangChain Expression Language (LCEL)

By giving a chain the template, the chat model, and the output format, and then calling invoke, the code can be simplified further

from langchain.chat_models import ChatOpenAI
from langchain.prompts import PromptTemplate, ChatPromptTemplate
from langchain.schema import BaseOutputParser

chat = ChatOpenAI(
    temperature=0.1
)

class CommaOutputParser(BaseOutputParser):
    # Turn a comma separated reply into a list of trimmed strings
    def parse(self, text):
        items = text.strip().split(",")
        return list(map(str.strip, items))

template = ChatPromptTemplate.from_messages([
    ("system", "You are a list generating machine. Everything you are asked will be answered with a comma separated list of max {max_items} in lowercase. Do Not reply with anything else."),
    ("human", "{question}"),
])

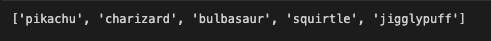

chain = template | chat | CommaOutputParser()

chain.invoke({
    "max_items": 5,
    "question": "What are the pokemons?"
})
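The parsing step can be tried on its own, without calling the model. The sketch below repeats the logic of the parse method as a standalone function (the name `parse_comma_list` and the sample reply are made up for illustration).

```python
def parse_comma_list(text):
    """Split a comma separated reply and trim whitespace around each item."""
    items = text.strip().split(",")
    return list(map(str.strip, items))


# A hypothetical model reply with uneven spacing and a trailing newline
reply = " pikachu, charmander ,bulbasaur\n"
result = parse_comma_list(reply)
print(result)
```

Because the system prompt asks for a comma separated list and nothing else, this simple split is usually enough; a reply that ignores the instruction would need extra handling.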

========================================================================================

5. LangChain Expression Language (LCEL), advanced

chain = template | chat | CommaOutputParser()

Data flow: the template formats the prompt -> chat produces the reply -> CommaOutputParser shapes the output

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.callbacks import StreamingStdOutCallbackHandler

chat = ChatOpenAI(
    temperature=0.1,
    # Lets you watch the gpt reply as it is being generated
    streaming=True,
    callbacks=[StreamingStdOutCallbackHandler()]
)

chef_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a world-class international chef. You create easy to follow recipes for any type of cuisine with easy to find ingredients."),
    ("human", "I want to cook {cuisine} food."),
])

chef_chain = chef_prompt | chat

veg_chef_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a vegetarian chef specialized in making traditional recipes vegetarian. You find alternative ingredients and explain their preparation. You don't radically modify the recipe. If there is no alternative for a food just say you don't know how to replace it."),
    ("human", "{recipe}"),
])

veg_chain = veg_chef_prompt | chat

# The output of chef_chain becomes the {recipe} input of veg_chain
final_chain = {"recipe": chef_chain} | veg_chain

final_chain.invoke({
    "cuisine": "indian"
})


With this code, the recipe produced by chef_chain (built from chef_prompt) is passed into the recipe variable of veg_chain, so the two templates are combined into a single answer.
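To make the data flow easier to picture, here is a toy model of the pipe syntax in plain Python. It is only an illustration under simplifying assumptions, not how LangChain is implemented: the `Step` class and `coerce` helper are hypothetical, and the two lambdas stand in for the real prompt-plus-model chains.

```python
class Step:
    """Hypothetical stand-in for a chain element that supports the | operator."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # self | other: run self first, then feed its output to other
        nxt = coerce(other)
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other):
        # other | self, where other is a plain dict such as {"recipe": chain}
        return coerce(other) | self


def coerce(obj):
    """Turn a dict of steps into one step that runs each entry on the same input."""
    if isinstance(obj, dict):
        return Step(lambda value: {key: coerce(step).invoke(value) for key, step in obj.items()})
    return obj


# Fake chains: no model calls, just string manipulation
chef_chain = Step(lambda inputs: f"{inputs['cuisine']} curry with chicken")
veg_chain = Step(lambda inputs: inputs["recipe"].replace("chicken", "tofu"))

final_chain = {"recipe": chef_chain} | veg_chain
result = final_chain.invoke({"cuisine": "indian"})
print(result)
```

The dict on the left runs chef_chain on the original input and stores its output under the key recipe, which is exactly the variable name that the second chain expects.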
